Introduction

When we take a photograph outside, we are capturing light that has travelled 150 million kilometres (1 au), scattered in the atmosphere, and bounced off an object before finally being captured by the camera sensor and stored as numbers in an image file. The rendering process in computer graphics is basically the same, except that the world is virtual, so the numbers in the image come from a simulation inside the computer.

There are many publications and books on computer graphics, and they can be overwhelming for people who want an introduction to rendering and imaging. Most books explain how light gets transformed until it is synthesised into an image. In this article, though, I’ve tried to make the journey backwards, to help people troubleshoot what may have gone wrong when a pixel on the screen looks funny or has the wrong colour. You can follow the journey with me, or you can skip directly to the practical tips I list in the last section.

Your eyes: the end of the journey

Whatever image we produce is ultimately going to be consumed by human eyes. And what I perceive may not necessarily be the same as what you perceive. Perception is a psychological process that includes sensation, memory, and thought, and results in meaning such as recognition, identification, and understanding [1]. What a person sees and experiences is called a percept (the product of perception, which is a process). What a person reports seeing is a verbal attempt to describe a nonverbal experience.

I’m not going to discuss visual perception in detail, but I’ve started the discussion with this because more often than not problems with images are simply a mismatch of expectations, especially if all the communication, from a person requesting an image to the final evaluation, has been verbal. Let’s use Adelson’s famous checker shadow illusion as an illustration:

The squares marked A and B are the same shade of gray.

Because our brain reconstructs the 3D scene it’s seeing, it concludes that square B is lighter than square A. But in terms of pixels, both A and B have exactly the same shade. You can verify this by using a finger to connect both squares. Knowing this, you can recognise this request as ambiguous: “could you make B brighter?”. There are many ways to achieve that, all with very different results: paint B brighter, making it stand out; increase the overall exposure of the image, making A brighter as well; add a spot light directed towards B, making it and its surroundings brighter; and so on. If you want to see more of these visual tricks, I recommend the book Mind Hacks [2].

What are the colours of shadows?

As seen with that example, a common misunderstanding in communication is mixing up the brightness of an object with the brightness of the light. This applies to colour as well. If I ask you for the colour of a shadow outdoors, would you say it’s grey, blue, or the colour of the surface on which it’s projected, e.g., green if on grass? In terms of pixel values, shadows outdoors are bluish, because that’s the colour of the ambient light that comes from the sky. This is also the reason why it is preferable to sketch drawings using a blue pencil if you are going to colour the drawing later. But many people won’t explicitly perceive those shadows as being blue.

A dot on a screen

Let’s assume that the image you are seeing is on a screen, although similar discussions apply to printed images. Many things can go wrong when displaying an image on a screen:

- different monitors have different colour gamuts;

- depending on the technology, the brightness of a pixel can affect the brightness of the pixel next to it;

- even when using the same model of a screen, users can have different colour temperature settings, automatic time-of-day adjustments, colour filters, or even physical screen filters.

Warmer colours by decreasing the colour temperature

The correct thing to do would be for all parties to use a colorimeter to calibrate their monitors, but more often than not colour calibration is only a must for people digitising the world, e.g., to make sure a material they photograph appears the same on screen. For other people I often recommend that they download the image onto their iPhones or iPads. This has the advantage that it’s a well-known screen, and it should look the same for everyone, provided that they don’t have any screen filter and that they have disabled Night Shift. Recent smartphones use OLED displays, which do not suffer from brightness bleeding and provide a wider colour gamut, Display P3 on Apple devices. Apple software also tends to do the right thing with the image, that is, it handles colour profiles correctly. Which brings us to the next topic.

Pixels in a file

Mainly three things can go wrong when displaying an image from a file: a wrong colour profile, quantisation artefacts, and encoding artefacts.

Colour profiles

Comparison of some RGB and CMYK colour gamuts on a CIE 1931 xy chromaticity diagram. Source: Wikipedia

A colour profile describes the colour attributes of a particular device, and how to map between different colour spaces. I recommend reading a previous article where I introduced the concept of colour spaces. But for now just bear in mind that if different screens have different gamuts and different colour capabilities, we would need a way to convert between them, without losing information whenever possible.

Errors here can happen when saving the file and when displaying it. When saving the file, we could be working with a particular colour profile, e.g. Adobe RGB, and then forget to embed the profile in the file that we save. Or the output format that we choose may not support colour profiles. Then, the software displaying that image will assume a default colour profile, which is usually sRGB, and the colours will look wrong. But even if we do embed the colour profile, not all software processes it correctly. For instance, some browsers assume PNG images are always sRGB and ignore their embedded colour profile. And yet again, even if the software does understand colour profiles, we need a rule to convert from one space to another, the rendering intent. The default may not be what we expect. In the example below, I loaded an image in sRGB colour space (the right image) into Photoshop (left image). Photoshop has converted the colour space to Adobe RGB. To make things more complicated, I have then taken a screenshot of Photoshop and the original image in macOS Preview, and the screenshot stores the colour profile of my monitor in the resulting image. The important thing to notice is the colour shift that happens in Photoshop:

sRGB image loaded into Photoshop has its colours shifted by default. Drawing generated with AI Gahaku.

In the previous post, I also give some testing images in Display P3 colour space. In general, for images on the web I recommend sticking to sRGB, unless we are specifically targeting Apple devices, where we could use Display P3 to make use of the wider gamut.

Another word of warning concerns the common practice of taking screenshots. When you take a screenshot, at least with the default tool on macOS, it embeds the colour profile of the screen where you took the screenshot, Display P3 in many cases. Say you drag and drop that screenshot into some online slides. The screenshot could be wrongly read as if it were in sRGB, and the colours would look wrong. If the original thing we wanted to embed was in sRGB, it would have been better to drag that image instead of the screenshot.
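A quick way to check what colour information a PNG actually carries is to list its colour-related chunks. The sketch below uses only the Python standard library and looks for the chunk names defined by the PNG specification (iCCP for an embedded ICC profile, plus sRGB, gAMA, and cHRM). The function name `png_colour_chunks` is made up for this example, and the code neither validates CRCs nor decodes the profile itself.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
COLOUR_CHUNKS = {"iCCP", "sRGB", "gAMA", "cHRM"}


def png_colour_chunks(data):
    """Return the names of the colour-related chunks found in PNG bytes."""
    if data[:8] != PNG_SIGNATURE:
        raise ValueError("not a PNG file")
    found = []
    pos = 8
    while pos + 8 <= len(data):
        # Each chunk starts with a 4-byte big-endian length and a 4-byte type.
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        name = ctype.decode("latin-1")
        if name in COLOUR_CHUNKS:
            found.append(name)
        if name == "IEND":
            break
        pos += 8 + length + 4  # header + payload + CRC
    return found


# Hypothetical usage, assuming a file called screenshot.png exists:
# with open("screenshot.png", "rb") as f:
#     print(png_colour_chunks(f.read()))
```

An empty list means the file carries no colour information at all, so whatever displays it has to guess, and the guess is usually sRGB.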

Quantisation artefacts

Quantisation is needed to convert a continuous signal into a discrete one. Most of the images we see online use 8 bits per colour channel, which means only 256 distinct values per channel. With three colour channels, as in RGB colour spaces, that makes a total of about 16.7 million colours (256³). It sounds like a lot, especially considering that the human eye can distinguish only up to about 10 million colours, but it’s not enough to cover gamuts wider than sRGB.

To avoid quantisation problems, we need to store images with more bits per channel. PNG images in Display P3 will typically use 16 bits per channel. Without considering the alpha channel, that doubles the raw data, and the compressed file can grow even more. For instance, the image below was originally a 295 KB 16-bit PNG image in Display P3 colour space. I have converted it to a normal sRGB image, with 8 bits per channel, and the PNG size became 75 KB. In comparison, the image to the right only uses a 256-colour palette, and occupies 25 KB on disk.

8-bit sRGB (left) vs 256-colour palette (right). Image generated with Palettist.

Quantisation artefacts usually translate into banding artefacts. These banding artefacts are more visible in changes of luminance than in changes of colour, though. The quantisation I did with the image above when I converted it to 8-bit has introduced some banding, although it is probably hard to notice. The 256-colour image to the right should help illustrate the banding that occurs when you don’t have enough colours.
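To see where the bands come from, here is a minimal sketch that quantises a smooth ramp to different bit depths and counts how many distinct levels survive. The ramp width and the helper name `quantise` are arbitrary choices for this illustration.

```python
def quantise(value, bits):
    """Round a value in [0, 1] to the nearest of 2**bits evenly spaced levels."""
    levels = (1 << bits) - 1
    return round(value * levels) / levels


# A smooth horizontal ramp from black to white, 1024 pixels wide.
ramp = [x / 1023 for x in range(1024)]

for bits in (8, 5, 3):
    banded = [quantise(v, bits) for v in ramp]
    distinct = len(set(banded))
    print(f"{bits} bits: {distinct} distinct levels, "
          f"bands about {len(ramp) / distinct:.0f} pixels wide")
```

The fewer the levels, the wider each flat band becomes, and the easier it is to see the step between two neighbouring bands.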

High Dynamic Range (HDR) images also require more bits per pixel in order to store a wider luminance range. Because most screens have a limited luminance range, HDR images have to be tone-mapped into Low Dynamic Range (LDR) before being displayed. That could simply mean selecting the exposure, or applying some artistic post-processing. Most HDR TVs use a 10-bit encoding to represent both a higher luminance range and a wider RGB gamut. TVs then come with a series of preset filters that tone-map the signal in different ways. But if the assets in a game are not HDR-ready, it doesn’t matter that your HDR-ready PlayStation 4 is connected to an HDR TV: you may still see some banding artefacts.

Encoding artefacts

This is probably the easiest problem to spot and the easiest to solve. Images would be very big if stored as raw pixels. On a 4K UHD TV, images are 3840 × 2160 pixels. If we use 16-bit RGB images, the raw size will be 3840 × 2160 pixels × 6 bytes/pixel, approximately 50 MB for a single image. But images contain lots of redundancy, both spatially and in frequency, so fortunately we can compress them.
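The arithmetic above generalises to any resolution and bit depth. As a small sketch (the function name is an assumption for this example), here it is applied to the two resolutions commonly called 4K: 3840 × 2160 on consumer TVs and 4096 × 2160 in digital cinema.

```python
def raw_size_bytes(width, height, channels=3, bits_per_channel=16):
    """Size of an uncompressed image, ignoring any file header."""
    bytes_per_pixel = channels * bits_per_channel // 8
    return width * height * bytes_per_pixel


for name, width, height in (("UHD 4K", 3840, 2160), ("DCI 4K", 4096, 2160)):
    size = raw_size_bytes(width, height)
    print(f"{name}: {size} bytes, about {size / 1e6:.1f} MB")
```

Either way, a single uncompressed 16-bit RGB frame lands at around 50 MB.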

The most common formats on the web are still PNG and JPEG. PNG images use lossless data compression, so the resulting files are still fairly big. JPEG compresses much more, but it suffers from the block artefacts characteristic of the Discrete Cosine Transform (DCT). JPEG was supposed to be replaced by the JPEG 2000 (JP2) standard, which uses wavelet transforms to compress not only in the frequency domain, as the DCT does, but also in the spatial domain. It produces much better quality images for the same level of compression, and it also supports an alpha channel, but unfortunately it is only widely supported on Apple devices. Recent browsers also support WebP, which uses DCT and entropy encoding. Although not meant for the web, OpenEXR is also worth mentioning: it is a format created by ILM that supports 32-bit HDR images, so it is often the preferred output of ray tracers and renderers.

Below I’ve put some examples of the types of artefacts you will see if someone compresses the image too much using JPEG. Since we can’t recover what’s already lost, the only solution is asking the person who exported the file to export it again with better quality, or with lossless compression.

Illustration of block artefacts in JPEG
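To get a feeling for why heavy JPEG compression loses detail, the following sketch applies a DCT to a single row of 8 samples, rounds the coefficients to multiples of a step, and reconstructs the row. This is a deliberately simplified illustration: real JPEG works on 8 × 8 blocks with a 2D DCT, a per-frequency quantisation table, and entropy coding, none of which are modelled here. The sample values are arbitrary.

```python
import math

N = 8  # one 8-sample row; JPEG itself uses 8 x 8 blocks


def _scale(k):
    # Orthonormal scaling so that idct() exactly inverts dct().
    return math.sqrt(1 / N) if k == 0 else math.sqrt(2 / N)


def dct(block):
    """Orthonormal DCT-II of a list of N samples."""
    return [_scale(k) * sum(x * math.cos(math.pi * (n + 0.5) * k / N)
                            for n, x in enumerate(block))
            for k in range(N)]


def idct(coeffs):
    """Inverse of dct()."""
    return [sum(_scale(k) * c * math.cos(math.pi * (n + 0.5) * k / N)
                for k, c in enumerate(coeffs))
            for n in range(N)]


def compress_row(block, step):
    """Round every DCT coefficient to a multiple of `step`, then reconstruct."""
    quantised = [round(c / step) * step for c in dct(block)]
    return idct(quantised)


row = [52, 55, 61, 66, 70, 61, 64, 73]
for step in (1, 20, 80):
    restored = compress_row(row, step)
    error = max(abs(a - b) for a, b in zip(row, restored))
    print(f"step {step}: largest error {error:.1f}")
```

A larger step means fewer distinct coefficient values to store, but also that the small high-frequency coefficients get rounded to zero. Because each block is processed independently, neighbouring blocks end up with slightly different errors, which is what makes the block edges visible.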

Light before turning into pixels

It’s time to get physical. Let’s assume we have a virtual 3D scene in our computer. That scene contains a series of objects, that is, geometry with materials assigned to them, a bunch of lights, and a virtual camera. Let’s discuss what needs to happen for it to become an image.

I’m mostly going to cover some equations from radiometry, the field that studies the measurement of electromagnetic radiation. For light, that radiation is the flow of photons, which can behave as particles or waves. The wave behaviour can mostly be ignored in rendering. Photometry studies similar things, but it weights everything by the sensitivity of the human eye. So even though most equations come from radiometry, many applications use photometric units. The last important field is colorimetry, the field that tries to quantify how humans perceive light. The article about colour spaces gives more details. Here we just need to know that we can divide light into three separate signals, what we call the red, green, and blue channels, and that the equations presented here can be applied to each channel independently. I’ve tried to summarise here the most important points of the Advanced Shading and Global Illumination chapters in Real-Time Rendering [3].

The shading equation

The image that we see is a series of discrete values captured by a camera sensor, whether a real sensor or a virtual one from our renderer. The value captured by the sensor is called radiance (L), and it’s the density of light flow per area and per incoming direction. Here’s a fairly complete shading equation used to compute radiance:

Eq. 1. Shading equation

It looks complicated, but it’s a quite straightforward sum over all the lights in the scene, because irradiance E is additive. Irradiance measures the energy of the photons passing through a unit area of the surface in one second. Its units are watts per square metre, and its photometric equivalent is illuminance, measured in lux. Radiance is measured in watts per square metre per steradian, and its photometric equivalent is luminance, measured in nits, or candelas per square metre. Let me roughly explain the terms in equation (1):

- L_o is the outgoing radiance, which depends on the view direction v.

- The first term adds the contribution from the ambient light. In an outdoor scene, that would be the sky. K_A is an ambient occlusion term, and c_amb is a blend of the diffuse and specular colours of the material. E_ind is the irradiance of the indirect light, which can be a constant. ⨂ is the component-wise vector multiplication.

- The number of light sources is n, and l_k is the direction of the k-th light source.

- υ (upsilon) is a visibility function, which corresponds to shadows for direct light sources.

- f is the Bidirectional Reflectance Distribution Function (BRDF). It describes how light is reflected from a surface given the incoming light direction l and outgoing view direction v.

- The final term is the irradiance of each light multiplied by the clamped cosine of the angle between the light direction and the surface normal. That means that if the surface is perpendicular to the light (the normal is parallel to the light direction), the surface will be fully lit, and it will receive progressively less light as the angle grows. The cosine is clamped because negative values correspond to light coming from below the surface.

Angle between light direction and surface normal
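Going only by the terms listed above, the structure of equation (1) can be sketched in code as an ambient term plus a sum over the lights. This is a sketch under assumptions rather than the exact equation: any constant factors (for instance a 1/π normalisation of the ambient term) are left out because the text does not state them, the BRDF is passed in as a function, and the function and parameter names are made up for this example.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(view, normal, lights, brdf, k_a, c_amb, e_ind):
    """Outgoing RGB radiance towards `view`.

    lights: list of (direction, irradiance_rgb, visibility) tuples, where
    direction is a unit vector pointing from the surface to the light and
    visibility is 1.0 when lit and 0.0 when in shadow.
    brdf: function (light_direction, view_direction) -> RGB triple.
    """
    # Ambient term: occlusion * ambient colour * indirect irradiance.
    radiance = [k_a * c * e for c, e in zip(c_amb, e_ind)]
    for direction, irradiance, visibility in lights:
        # Clamped cosine: light from below the surface contributes nothing.
        cos_theta = max(0.0, dot(normal, direction))
        f = brdf(direction, view)
        for ch in range(3):
            radiance[ch] += visibility * f[ch] * irradiance[ch] * cos_theta
    return radiance
```

Each channel is computed independently, and every light simply adds to the result, which is why a missing ambient term or a zero BRDF shows up so clearly in the final image.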

Let’s look at the BRDF as well. For most rendering needs, we can use the following equation:

Eq. 2. Blinn-Phong BRDF extended to include Fresnel

This is the Blinn-Phong model, extended to include a Fresnel term. The terms are:

- c_diff is the diffuse colour of the surface, the main colour that we will see in non-metallic objects.

- The next term is the specular light, very important in metals, but also present in most materials. m is the surface smoothness, and h is the half vector between l and v.

- R_F is the Fresnel reflectance, responsible for the increase of reflectance at glancing angles. It’s usually computed with the Schlick approximation, an interpolation between white and the colour the reflectance would have when the light is perpendicular to the surface (i.e. when alpha is zero).
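The terms above can be put together in a few lines of code. The sketch below uses a commonly used normalised form of Blinn-Phong, with a 1/π factor on the diffuse term and (m + 8)/(8π) on the specular term; those normalisation factors are an assumption, since the equation image is not reproduced here, and the function names are made up for this example.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalise(v):
    length = math.sqrt(dot(v, v))
    return [x / length for x in v]


def schlick(r0, cos_alpha):
    """Schlick approximation: blend from r0 (head-on) to white (grazing)."""
    w = (1.0 - cos_alpha) ** 5
    return [r + (1.0 - r) * w for r in r0]


def blinn_phong_brdf(l, v, n, c_diff, r0, m):
    """RGB value of the BRDF for unit vectors l (light), v (view), n (normal).

    c_diff: diffuse colour, r0: Fresnel reflectance at normal incidence,
    m: smoothness exponent. Assumes l and v are not exactly opposite.
    """
    h = normalise([a + b for a, b in zip(l, v)])  # half vector
    cos_theta_h = max(0.0, dot(n, h))
    cos_alpha_h = max(0.0, dot(l, h))
    fresnel = schlick(r0, cos_alpha_h)
    specular = (m + 8.0) / (8.0 * math.pi) * cos_theta_h ** m
    return [cd / math.pi + specular * f for cd, f in zip(c_diff, fresnel)]
```

Setting c_diff to zero leaves only the highlight, and setting r0 to zero leaves a purely diffuse surface except at grazing angles, where the Fresnel term still rises towards white.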

Again, all this looks complicated, but in practical terms what it means is that we will have a series of RGB values that can be arbitrarily big to represent the irradiance, that will get multiplied by RGB values normalised from 0 to 1 that represent the colour of the surface and the presence or not of shadow. Then, we will add all these values together. I wanted to put all the terms in here because if you understand what each term means, it can be easy to troubleshoot problems in the rendered image. For instance,

- If all the shadows look completely black, we have probably forgotten to add the ambient term, which in turn could mean we forgot to add an environment map (see next section).

- If the object looks all black, but you can see highlights, it could mean that c_diff is zero, so perhaps what you are missing is the albedo map (see next section).

- If the object looks too metallic, the specular term is to blame, so you need to check your material settings.

- If there is a lack of contrast between lit surfaces and parts in shadow, it probably means that your lights are not strong enough.

- If your scene is outdoors but colours on objects do not look warm, perhaps the irradiance is monochromatic. If you are using an environment map, it could mean that the sun saturated to white when photographed. Did you capture light correctly?

Examples of unexpected material appearance. Garment created with VStitcher, and rendered with V-Ray.

Rendering techniques

Light doesn’t just travel from the light source to the object surface and then to the sensor, but it bounces around. Most real-time rendering methods use “tricks” or approximations to model global illumination. For instance, you can render the scene as if seen from the light to compute something called a depth map, and use that when you are rendering the scene from the main camera to figure out if a pixel is in shadow or not, that is, the value of the visibility function. There are many artefacts in real-time rendering, depending on the rendering engine that we are using: lack of soft shadows, jaggies in shadows, monochromatic ambient occlusion, lack of texture in clothes, and lack of material correctness in general.
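The depth-map trick can be sketched as a simple lookup and comparison. In this illustration the depth map is a plain list of rows, the point being shaded has already been transformed into the light’s coordinates as (u, v, depth) with u and v in [0, 1], and the bias value is an arbitrary choice; all of these are assumptions for the example rather than how any particular engine does it.

```python
def visibility(depth_map, light_space_pos, bias=0.005):
    """Return 1.0 if the point is lit, 0.0 if something is closer to the light.

    depth_map[row][col] holds the depth of the nearest surface seen from the
    light. A small bias avoids surfaces wrongly shadowing themselves.
    """
    u, v, depth = light_space_pos
    rows, cols = len(depth_map), len(depth_map[0])
    col = min(cols - 1, max(0, int(u * cols)))
    row = min(rows - 1, max(0, int(v * rows)))
    return 1.0 if depth <= depth_map[row][col] + bias else 0.0


depth_map = [[0.5, 0.5],
             [0.5, 1.0]]
print(visibility(depth_map, (0.25, 0.25, 0.4)))  # in front of the stored depth
print(visibility(depth_map, (0.25, 0.25, 0.9)))  # behind the stored depth
```

The answer is a hard yes or no per depth-map texel, which is exactly where the jaggies and the lack of soft shadows come from.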

Ray tracing is often used as the ground truth to see what a scene should look like. It is also used to “bake” or precompute textures that will be used in real-time renderers. Modern GPUs have some hardware capabilities to do ray tracing in real time, but it is still quite computationally expensive. Note that ray tracers do not usually follow the journey of light from the light source to the camera. Instead, as in this article, the journey of photons is followed backwards. It is way cheaper to project rays from the camera onto the scene, since we aren’t interested in rays that do not end up on the screen (or sensor).

Ray tracing has its own artefacts, mostly related to the quality settings of the ray tracer. These are usually easy to spot. Because we can’t sample rays in every direction (there are infinitely many), we need to limit the number of directions we sample. That means using some kind of stochastic sampling of a sphere, such as the Monte Carlo method. If there are not enough samples, there will be a distinctive noise in the images that looks like peppered shadows. When similar techniques are applied to real-time rendering methods, the number of samples is even lower, but they cheat by applying a blur filter to those shadows. In any case, if you are using a ray tracer and you see that kind of noise, you just need to give your simulation more time. Check the quality parameters.

Left: common pepper noise in ray-tracing. Right: after increasing quality settings.
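The relationship between sample count and noise can be reproduced with a toy Monte Carlo estimate. The sketch below estimates the irradiance from a uniformly white sky (an assumed test scene whose exact answer is π) by sampling random directions on the hemisphere, and measures how much the estimate varies from pixel to pixel. The function names and the number of pixels are arbitrary choices for this example.

```python
import math
import random
import statistics


def estimate_irradiance(samples, rng):
    """Monte Carlo estimate of the integral of radiance * cos(theta)."""
    total = 0.0
    for _ in range(samples):
        # For directions spread uniformly over the hemisphere,
        # cos(theta) is itself uniformly distributed between 0 and 1.
        cos_theta = rng.random()
        radiance = 1.0  # constant white sky (assumption for this example)
        total += radiance * cos_theta
    # Each direction has probability density 1 / (2 * pi).
    return 2.0 * math.pi * total / samples


def pixel_noise(samples, pixels=200, seed=1):
    """Mean and standard deviation of the estimate across many pixels."""
    rng = random.Random(seed)
    values = [estimate_irradiance(samples, rng) for _ in range(pixels)]
    return statistics.mean(values), statistics.stdev(values)


for samples in (4, 64, 1024):
    mean, noise = pixel_noise(samples)
    print(f"{samples} samples: mean {mean:.3f}, noise {noise:.3f}")
```

The mean stays close to π, but the noise only shrinks with the square root of the number of samples, so halving it costs four times the render time.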

Exposure and tone mapping

From the shading equation you can see that if you keep adding lights, radiance can only get bigger and bigger. In the camera, real or virtual, you would adjust the exposure to capture more or less light. The range we need to capture can be very big. LCD screens typically have a luminance of 150 to 280 nits, a clear sky about 8000 nits, a 60-watt light bulb about 120,000 nits, and the sun at the horizon 600,000 nits. It is important that you get all the units and values right when applying the shading equation, but more often than not we use values much smaller than what they are in real life.

As I mentioned earlier when talking about HDR, the renderer could output the image in HDR. If the HDR image is 16-bit, that would mean 65,536 luminance values. However, that doesn’t cover the whole range of luminance in real life. So we tend to apply some exposure setting to the light itself. This makes things slightly confusing, because we will have the exposure applied to the lights and then the exposure applied to the final image. In an ideal world, we would have all the lights defined with real-world values, store the result in a 32-bit (per channel) HDR image, and then either select the exposure ourselves or apply some kind of tone mapping algorithm. In video games, the image gets automatically tone-mapped based on the brightness of the spot you are looking at, pretty much like what our eyes do by opening or closing the pupils.
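As a minimal sketch of exposure followed by tone mapping, the code below scales scene luminance by an exposure factor and then compresses it with the simple Reinhard operator x / (1 + x). Reinhard is just one well-known choice picked for illustration, not necessarily what any given renderer or game uses, and the exposure values are arbitrary. The luminance figures are the ones quoted in this section.

```python
def tone_map(luminance_nits, exposure):
    """Apply exposure, then compress to the range [0, 1) with Reinhard."""
    x = luminance_nits * exposure
    return x / (1.0 + x)


scene = {
    "LCD screen": 200.0,
    "clear sky": 8000.0,
    "60-watt bulb": 120000.0,
    "sun at the horizon": 600000.0,
}

for exposure in (1e-2, 1e-4):
    print(f"exposure {exposure}")
    for name, nits in scene.items():
        print(f"  {name}: {tone_map(nits, exposure):.3f}")
```

With the higher exposure everything brighter than the screen is squeezed close to 1, so the sky, the bulb and the sun become hard to tell apart; with the lower exposure the bright sources separate, but the screen drops towards black. The output here is still linear, so it would need gamma encoding before being stored in an 8-bit image.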

These exposure changes are hard to decouple, but in general if you feel the image is lacking contrast between lights and shadows, it’s often a problem with the light as mentioned earlier, and not a problem of the final exposure setting.

Left: increased exposure of side light; Right: increased exposure on the output image

Pixels that turn into light

We are coming full circle. All the problems that we explained regarding colour spaces and compression, and even perception, also matter in the virtual world. This is because we often capture the world using photographs and use them as assets in our virtual scene. I’ll talk here about textures and environment maps.

Textures

Images that wrap around a 3D object are usually referred to as textures. This process of wrapping is called texture mapping, so these images are also referred to as maps. They exist to save memory. In an ideal world where the 3D geometry was sufficiently detailed, we wouldn’t need textures. But for now, we rely on them to tell us what’s between two vertices. It’s a way of “cheating”.

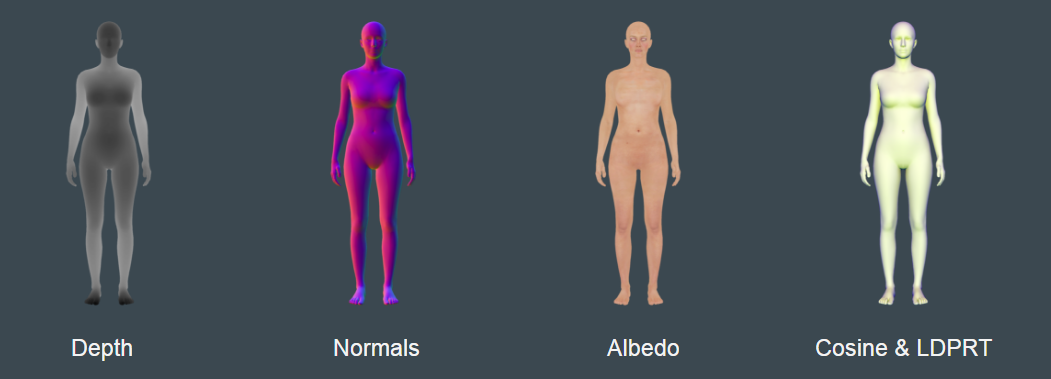

We use many different types of textures to describe the material of an object. I’ll discuss here mainly albedo maps and normal maps, but there are many others: specular maps, roughness maps, elevation maps, decals, and so on. Some are specific to a particular renderer.

Albedo maps show the diffuse colour of an object, the c_diff in equation (2). There’s no colour in the absence of light, so albedo maps are in fact lit, but by a constant illuminant. Sometimes the albedo map contains some embedded shadows that come from ambient occlusion. This can help improve realism in real-time renderers, but it’s generally incorrect, because the colour of the ambient light will be wrong. If you see some funny shadows that you can’t explain, inspect the albedo maps.

Another important thing is that albedo maps need to be in linear RGB space by the time the renderer uses them. When images are displayed on screen, gamma correction needs to be applied to them. If you have only 8 bits to store luminance, 256 values will not be enough for the darks, since our eyes are very sensitive to changes in dark shades. Without increasing the number of levels, you would see the banding artefacts we discussed earlier. But we can apply a non-linear transform and store the pixels of the image in gamma space. If images are 8 bits, this is nearly always going to be the case, but then the renderer will be responsible for converting the image to linear space before using it, because irradiance is only additive provided that everything is in linear space. If a material looks too dark, it could be that the albedo was saved in linear RGB but the renderer is mistakenly undoing the gamma. In the opposite mistake, where a gamma-encoded albedo is used as if it were linear, the material would look too bright. All the problems with colour spaces also apply.

The albedo map in the right image is saved in linear RGB. Both albedos are previewed correctly in macOS because it knows how to interpret the colour profile, but V-Ray is applying the inverse gamma when it shouldn’t, resulting in a dark coat.
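The conversion between gamma space and linear space is small enough to write out. The sketch below uses the standard sRGB transfer functions on values in [0, 1], and then reproduces the mistake from the caption: decoding an albedo that was already linear.

```python
def srgb_to_linear(c):
    """Decode an sRGB-encoded value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode a linear value in [0, 1] with the sRGB transfer function."""
    if c <= 0.0031308:
        return c * 12.92
    return 1.055 * c ** (1 / 2.4) - 0.055


albedo_linear = 0.5  # a mid-grey albedo, already stored in linear RGB

# Mistake 1: the renderer decodes a value that was already linear.
print(f"decoded twice: {srgb_to_linear(albedo_linear):.3f}")

# Mistake 2: a gamma-encoded value is used as if it were linear.
print(f"never decoded: {linear_to_srgb(albedo_linear):.3f}")
```

Decoding twice turns the 0.5 albedo into roughly 0.21, which is the dark coat in the render above. Skipping the decoding of a gamma-encoded map goes the other way and makes the material look washed out.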

Normal maps describe the normal direction of a surface. What in everyday language we’d call the “texture” of a piece of cloth mostly refers to what normal maps are responsible for. A flat T-shirt is not really flat. You can see the depth of the threads if you look closely. Given enough computing power we could scan the exact geometry and use it as it is, without the need for normal maps. But what we do to save resources is scan a tiny piece, and “bake” that geometry into a normal map, so we know how light will behave at a certain pixel, without needing to store all the exact geometry. If the normal map is missing, renders will lack “texture”, because the lighting will look flat and boring.

When the normal map is missing, materials lack “texture” because light acts as if the surface was flat.

If you go back to the light equation, notice how the normal influences every light term, either diffuse or specular. Here’s an example of a purely diffuse material and the effect of the normal map on it:

The left image has no normal maps. The right image has a normal map. The shade it produces introduces depth to the material.
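Here is a small sketch of how a normal map texel changes the diffuse term. It assumes the common tangent-space convention where each 8-bit RGB channel maps linearly from 0..255 to -1..1, so that the typical light-blue colour (128, 128, 255) means “pointing straight out of the surface”. Other conventions exist, and the function names are made up for this example.

```python
import math


def decode_normal(rgb):
    """Map an 8-bit RGB texel to a unit normal with components in [-1, 1]."""
    n = [2.0 * c / 255.0 - 1.0 for c in rgb]
    length = math.sqrt(sum(x * x for x in n))
    return [x / length for x in n]


def diffuse_factor(normal, light_dir):
    """Clamped cosine between the normal and a unit light direction."""
    return max(0.0, sum(n * l for n, l in zip(normal, light_dir)))


light = (0.0, 0.0, 1.0)  # light shining straight onto the surface
for texel in ((128, 128, 255), (200, 128, 220), (255, 128, 128)):
    shade = diffuse_factor(decode_normal(texel), light)
    print(f"texel {texel}: diffuse factor {shade:.2f}")
```

Without a normal map every pixel behaves like the first texel, which is why the surface looks uniformly lit. The tilted texels receive less light, and that variation is what reads as the weave of the fabric.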

Environment maps

Environment maps are a special type of texture used to cheat a bit with lights. The textures in the previous section were applied to the object material, but environment maps are a substitute for lights. You capture a 360-degree image (or rather, 4π steradians, the whole sphere) in multiple exposures, and then you combine them into a single HDR map. After that, the software integrates over it to obtain an irradiance map (it looks like a blurred version of the image). If you want to know the irradiance value for a surface facing a certain direction, you can simply sample the irradiance map. For specular reflections, you can directly sample the image.
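The integral that produces one entry of the irradiance map can be sketched as a brute-force sum over the pixels of the environment map. The example below assumes a single-channel latitude-longitude map stored as a list of rows, with the first row at the zenith (+z); the layout, resolution, and function name are assumptions for this illustration, and real tools use much faster methods.

```python
import math


def irradiance(env, normal):
    """Sum radiance * clamped cosine * solid angle over a lat-long map."""
    rows, cols = len(env), len(env[0])
    d_theta = math.pi / rows
    d_phi = 2.0 * math.pi / cols
    total = 0.0
    for r in range(rows):
        theta = (r + 0.5) * d_theta  # polar angle at the centre of the row
        # Pixels near the poles cover less of the sphere than at the horizon.
        solid_angle = math.sin(theta) * d_theta * d_phi
        for c in range(cols):
            phi = (c + 0.5) * d_phi
            direction = (math.sin(theta) * math.cos(phi),
                         math.sin(theta) * math.sin(phi),
                         math.cos(theta))
            cos_term = max(0.0, sum(d * n for d, n in zip(direction, normal)))
            total += env[r][c] * cos_term * solid_angle
    return total


# A uniformly white environment: the exact answer is pi for any normal.
white = [[1.0] * 64 for _ in range(32)]
print(f"{irradiance(white, (0.0, 0.0, 1.0)):.3f}")
```

Repeating this for every normal direction gives the blurred-looking irradiance map. It also shows why a sun that saturated during capture is a problem: its few pixels should dominate the sum, and once clipped they no longer do.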

Screenshot of HDRI Haven website, where you can find plenty of HDRI maps under Creative Commons license.

As mentioned earlier, if you notice a lack of contrast in your image, it could be that your light source is not bright enough. If you are using an environment map, that could mean that you didn’t capture enough exposures and your light source simply saturated to a bright spot. If you try to fix that afterwards by applying some gamma curve to the image, you may fix the contrast, but you may start experiencing banding artefacts at different luminance values.

Again, all the problems that we already mentioned at the beginning about colour spaces and image encoding apply here as well. Be very careful with your environment map, because if you get this wrong, all the lighting will be wrong, and it will be hard to decouple from all the other issues we have already mentioned. If you aren’t sure whether the funny colours are due to materials or lights, try rendering white objects with that light, and also try replacing your light with some well-known outdoor and indoor settings, and then see what the materials on your object look like.

Conclusion

This has been a long journey backwards. Quite thorough, but not complete. Follow the bibliography and links for more details. I hope that by reading this guide and by looking at the examples, people can start to classify different kinds of errors in renders and troubleshoot where necessary. To summarise:

- first make sure you are communicating properly and that you use a common vocabulary;

- make sure everyone involved has some means to see the same image, even if that means using a smartphone screen;

- make sure images are saved in the correct format and with the correct colour space;

- make sure the ray-tracer has the correct quality settings;

- make sure there are no textures missing in the materials used in the 3D scene, and that those textures have also been created following the criteria above;

- make sure your light covers the range of luminance it’s supposed to, and that it has the right colour.

And rest your eyes from time to time!

References

[1] Richard D. Zakia. Perception & Imaging. Focal Press, 2nd edition, 2002.

[2] Tom Stafford, Matt Webb. Mind Hacks: Tips & Tools for Using Your Brain. O’Reilly, 2005.

[3] Tomas Akenine-Möller, Eric Haines, Naty Hoffman. Real-Time Rendering, Third Edition. A K Peters, 2008.